Unveiling Unspool

I am pleased to announce the side project I’ve been working on for the past 3 months, and hope to release to the public some time this year. It’s called Unspool, and it exists because I’ve recently got back into film photography and wanted a metadata tagging app that doesn’t make me want to throw things.

I intend to write a developer blog on my experiences building Unspool at least once a month, to give me accountability to:

- write something on this Web site more regularly

- finish Unspool and release it to the public. I haven’t decided what I’m going to do with it yet (open-source? freeware? on the App Store for £4.99? postcardware?) but right now, and for the foreseeable future, Unspool is a hobby which I work on in my free time. I certainly don’t ever expect to make a living from it.

What it does

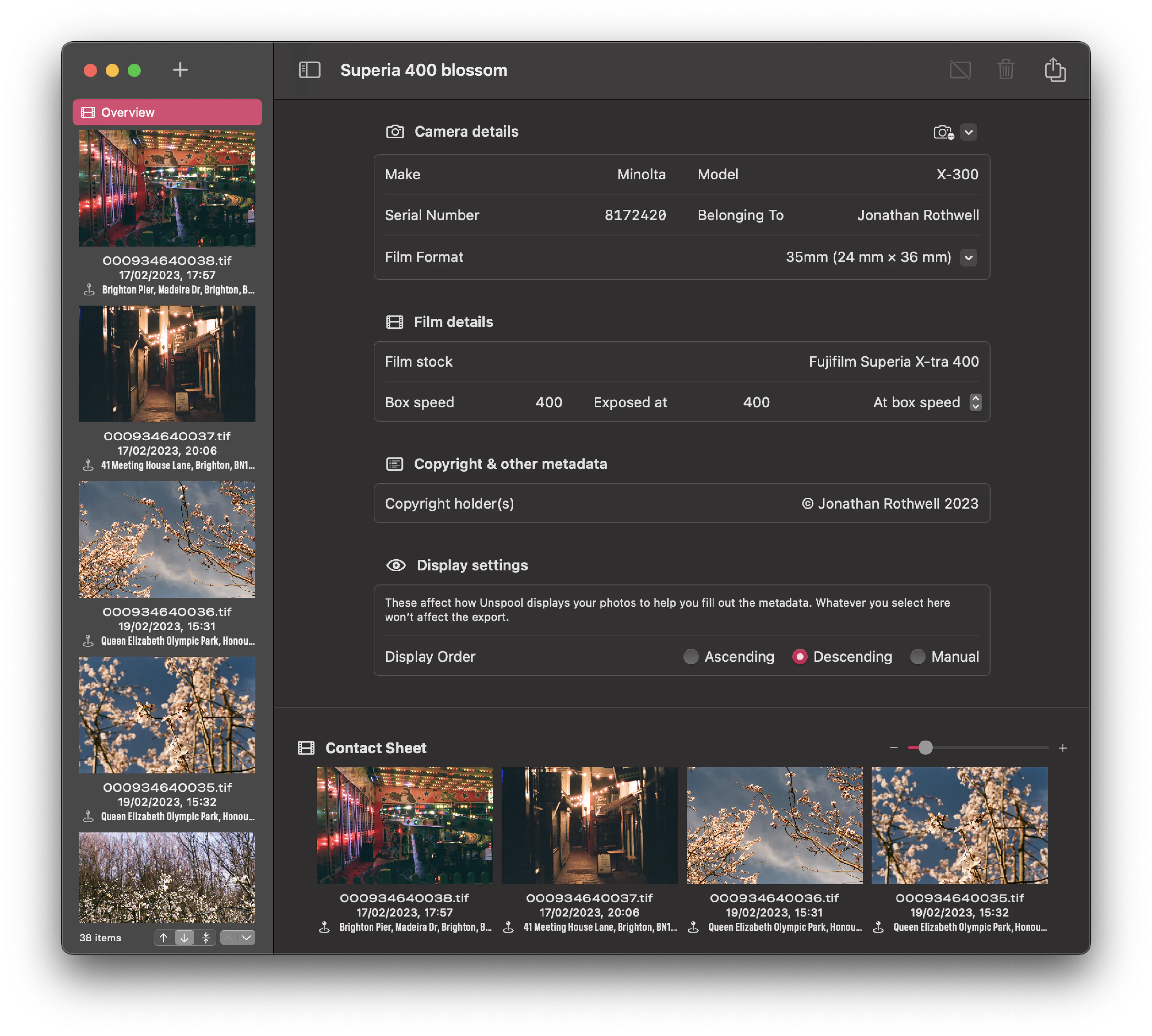

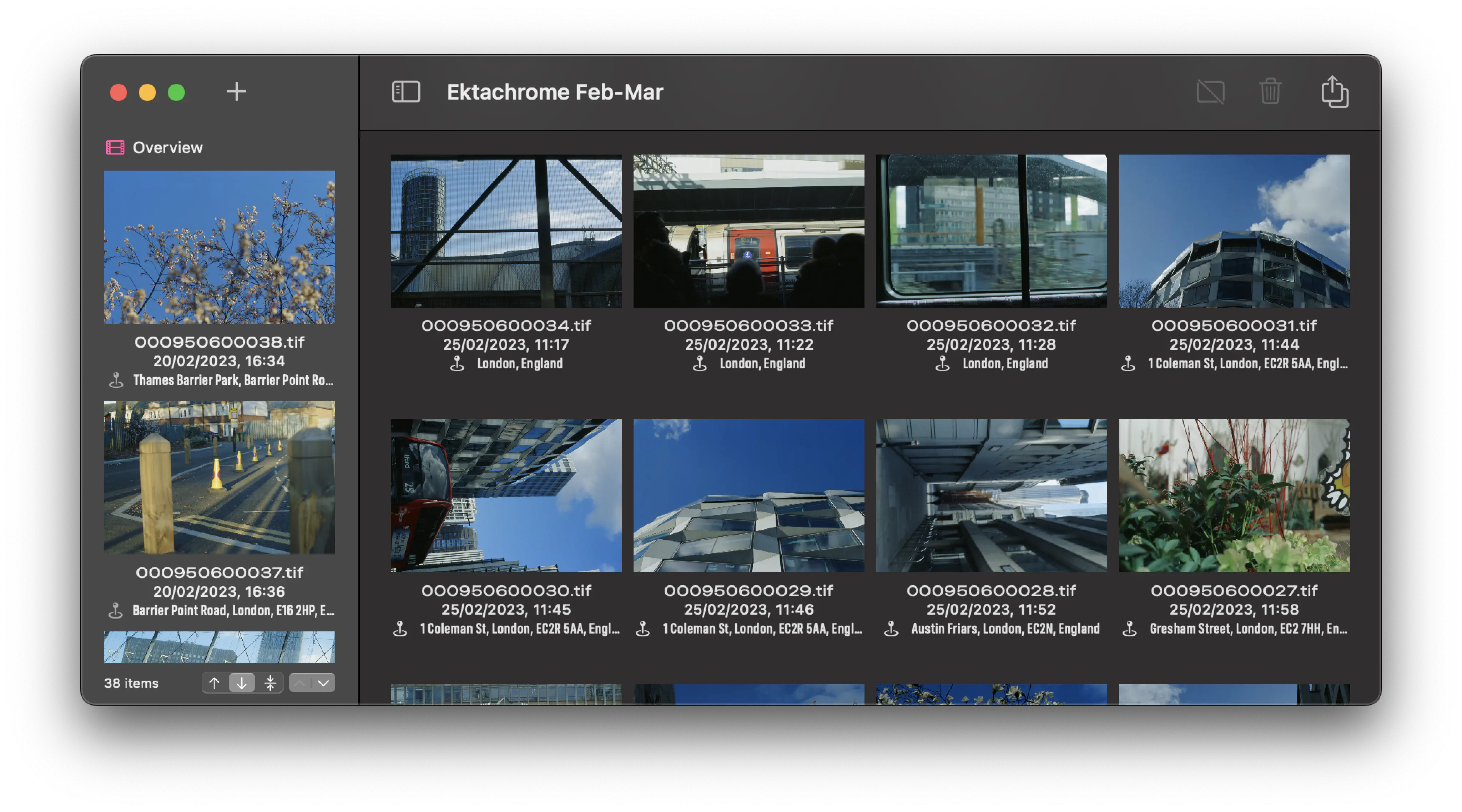

Unspool is a document-based app for MacOS. Each document represents a single roll or pack of film that was developed and then scanned into a computer. You bring your scans into the app by dragging them onto the window from the Finder or by using the conventional system Open panel.

You can then specify metadata for the entire roll, such as:

- The camera

  - Make & model

  - Serial number

  - The owner’s name

- The film format (this is used to set Exif data about the focal plane)

- The film stock

- The box speed

- The speed the film was actually exposed at

- If you pushed/pulled the film, how many stops. This is used to set the Exif exposure compensation value—I’m still not quite sure how this will work, since although metadata specifications tend to have a concept of exposure latitude, they don’t have a way of encoding a ‘recommended ISO speed’ and an actual speed.

- A copyright holder to encode in the Exif data
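The push/pull figure in the list above can be derived from the two speeds rather than typed in. A minimal sketch, assuming a hypothetical `FilmExposure` type (the names are illustrative, not Unspool's actual model):

```swift
import Foundation

/// Roll-level film speeds. The type and property names are illustrative only.
struct FilmExposure {
    /// The ISO speed printed on the box.
    let boxSpeed: Double
    /// The speed the roll was actually exposed at.
    let exposedSpeed: Double

    /// Stops of push (positive) or pull (negative).
    /// Each doubling of the rated speed is one stop, hence the base-2 logarithm.
    var pushPullStops: Double {
        log2(exposedSpeed / boxSpeed)
    }
}

// ISO 400 film rated at 1600 is two doublings, i.e. a two-stop push.
let pushed = FilmExposure(boxSpeed: 400, exposedSpeed: 1600)
print(pushed.pushPullStops)
```

How that number should map onto an Exif field is a separate question, and the sketch does not try to answer it.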

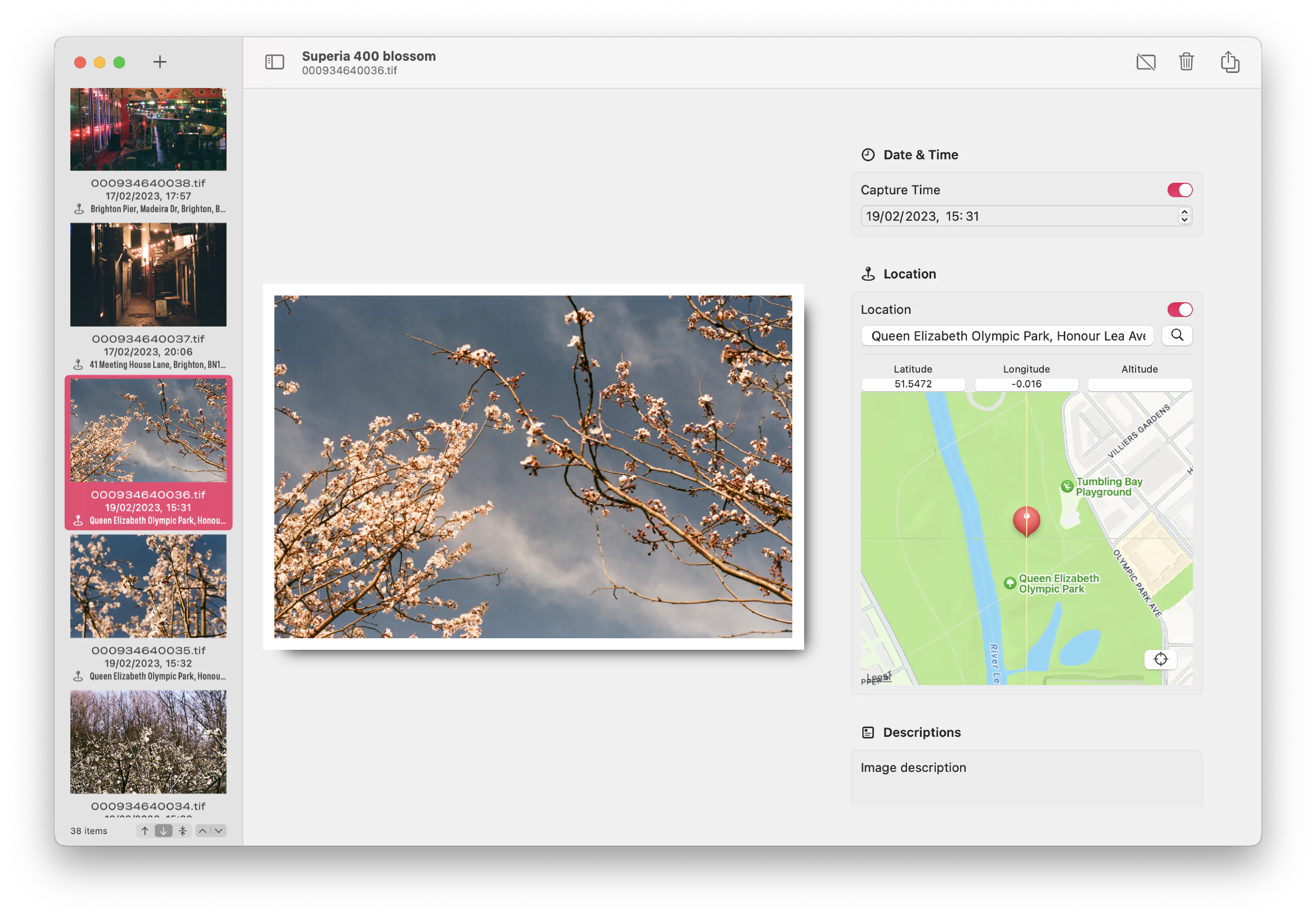

You can also set certain values for each exposure:

- Capture date and time

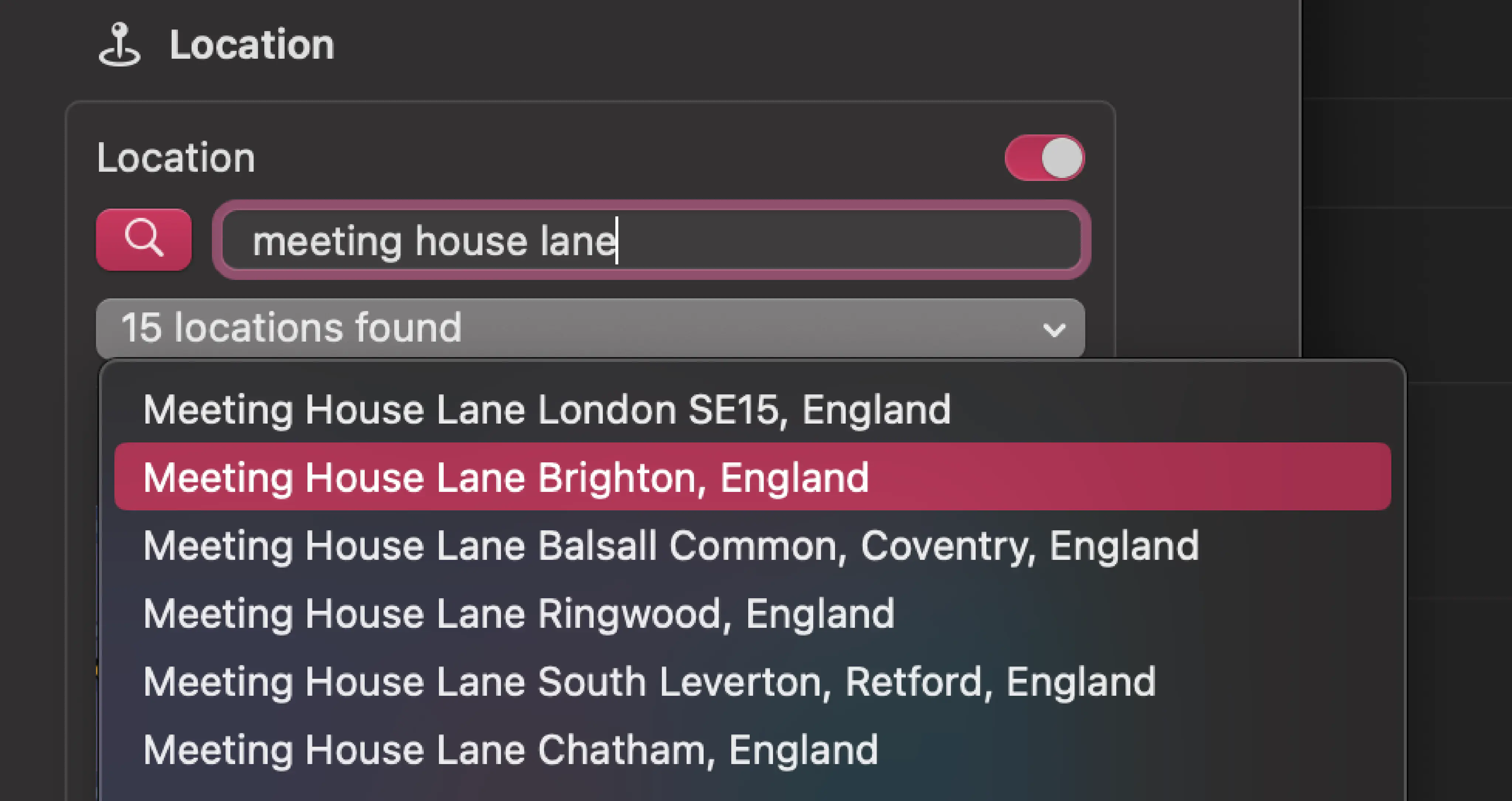

- Location

  - Search Apple Maps

  - Enter latitude/longitude

- Altitude

- An image description and alt text

You can also mark a shot as a ‘dud’, e.g. if you think it’s rubbish, or it’s under- or over-exposed, or there’s camera shake, or you left the lens cap on. When you export from Unspool, you can choose to have duds ignored.

Unspool also tries to make an educated guess about the time your photo was scanned, using the creation date from the file system and encoding this as the digitisation time in the Exif data.
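As a rough illustration of that guess, the sketch below reads a file's creation date and formats it in the `yyyy:MM:dd HH:mm:ss` layout that Exif date fields use. The function names are hypothetical, and the choice of time zone is an assumption, since the plain Exif timestamp carries no zone of its own.

```swift
import Foundation

/// Formats a date in the Exif layout "yyyy:MM:dd HH:mm:ss" (no time zone).
func exifTimestamp(for date: Date, in timeZone: TimeZone = .current) -> String {
    let formatter = DateFormatter()
    // A fixed POSIX locale avoids surprises from the user's locale or calendar.
    formatter.locale = Locale(identifier: "en_US_POSIX")
    formatter.timeZone = timeZone
    formatter.dateFormat = "yyyy:MM:dd HH:mm:ss"
    return formatter.string(from: date)
}

/// Best guess at when a scan was made: the file's creation date,
/// or nil if the file system does not report one.
func guessedDigitisationDate(ofFileAt url: URL) -> Date? {
    let attributes = try? FileManager.default.attributesOfItem(atPath: url.path)
    return attributes?[.creationDate] as? Date
}
```

The resulting string is the kind of value that would go under ImageIO's `kCGImagePropertyExifDateTimeDigitized` key.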

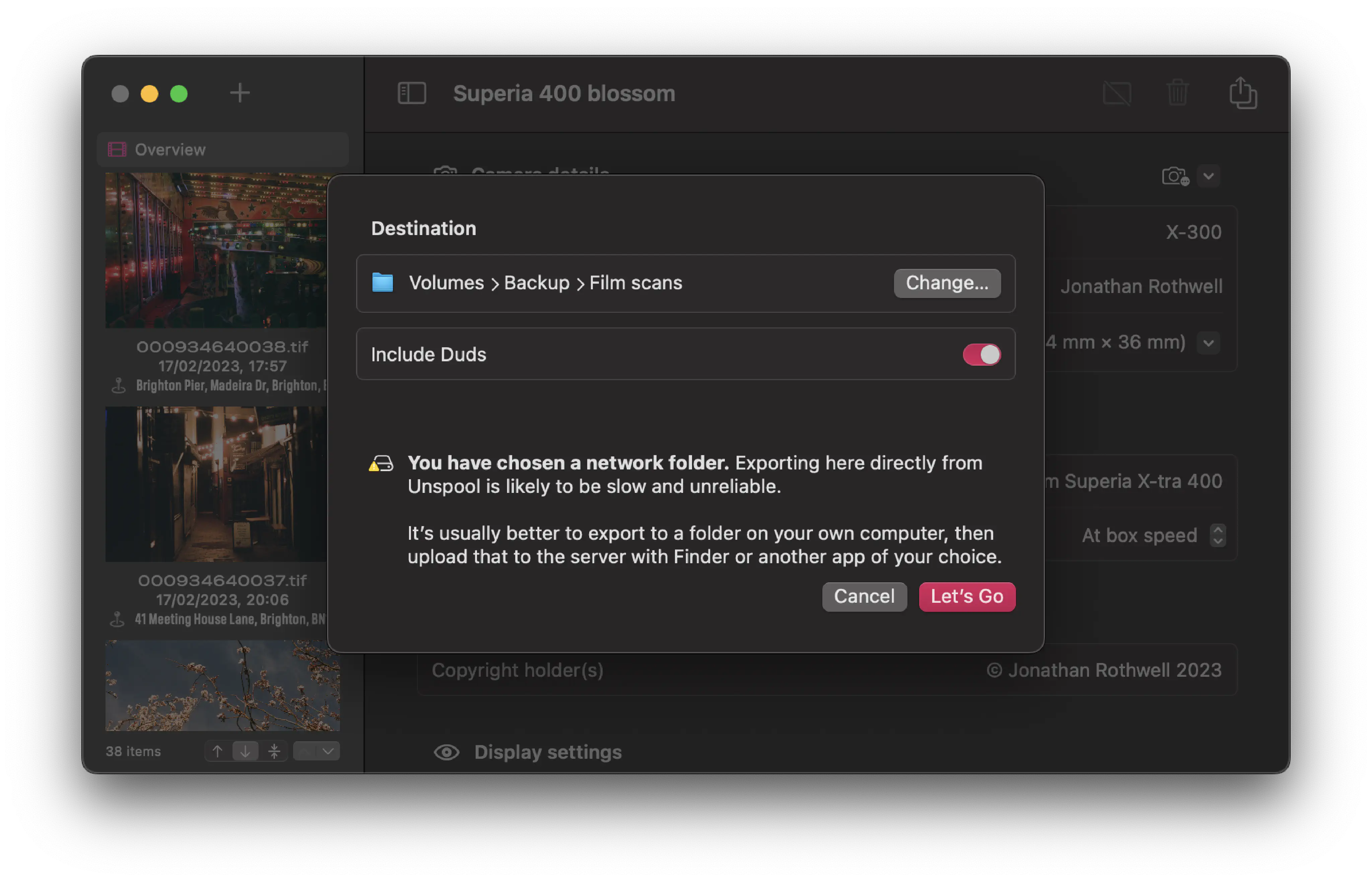

Exporting currently only writes to the file system, but will eventually also let you dump directly into your machine’s Photos.app library. This, combined with a file system export to a backed-up location, will also mean that you can immediately trash the Unspool document to free up space on your computer. In principle, you can back up to a mounted network drive (although this is probably not a great idea for choppy connections or enormous rolls).

How it works

It is a SwiftUI app, using the modern (and at times rather spotty) APIs for toolbars, documents, navigation views, etc. This is partly because it’s what I’m familiar with and enjoy using in my day job building iOS apps; I’m also interested in feeling out the corners of building a modern MacOS app. In time, I hope I’ll be able to support iPadOS too (in principle this is one of the things SwiftUI is good at) but right now, I’m focusing on building something that works well enough on the Mac to use on a regular basis.

The main scene is a DocumentGroup with each document representing a single roll of film that’s been developed and then scanned. Documents are packages, a MacOS feature that allows directories to masquerade to the Finder as single files. Inside the document is a JSON index file where all the Unspool metadata lives, along with the original files as imported from the file system. All the image formats Core Image is happy with should be supported, although I have only tested JPEG and TIFF.
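To give a feel for the index file, here is a minimal sketch of a Codable model round-tripping through JSON. The field names and layout are assumptions made for illustration; the real index will differ.

```swift
import Foundation

/// One scanned frame in the roll. Field names are illustrative only.
struct FrameEntry: Codable, Equatable {
    var filename: String
    var capturedAt: Date?
    var isDud: Bool
}

/// Roll-level metadata plus the list of frames.
struct RollIndex: Codable, Equatable {
    var cameraMake: String
    var cameraModel: String
    var filmStock: String
    var boxSpeed: Int
    var frames: [FrameEntry]
}

/// Encodes the index as human-readable JSON with ISO 8601 dates.
func encodeIndex(_ index: RollIndex) throws -> Data {
    let encoder = JSONEncoder()
    encoder.dateEncodingStrategy = .iso8601
    encoder.outputFormatting = [.prettyPrinted, .sortedKeys]
    return try encoder.encode(index)
}

/// Decodes an index written by `encodeIndex(_:)`.
func decodeIndex(from data: Data) throws -> RollIndex {
    let decoder = JSONDecoder()
    decoder.dateDecodingStrategy = .iso8601
    return try decoder.decode(RollIndex.self, from: data)
}
```

Sorted keys keep the file stable between saves, which makes diffs and test fixtures easier to read. Note that the `.iso8601` strategy stores whole seconds only.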

To avoid excessive RAM usage, images are loaded into memory only when being displayed or processed. I’ve not found a reliable way of keeping the thumbnails in memory yet, so scrolling tends to result in a bevy of loading indicators for a few seconds as the image is read from disk. In practice, neither of these is a problem on my own Apple Silicon machine, but performance is likely to be worse on an Intel computer—particularly those with less RAM, or even the older machines with a spinning hard disk.
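One approach that might be worth trying for the thumbnail problem (this is not what Unspool currently does) is to ask ImageIO for a downsampled thumbnail and hold it in an `NSCache`, which evicts entries under memory pressure. A sketch, with a hypothetical `ThumbnailCache` type and arbitrary default sizes:

```swift
import Foundation
import ImageIO

/// Keeps small decoded thumbnails in memory, keyed by file URL.
/// The type name and default limits are assumptions for illustration.
final class ThumbnailCache {
    private let cache = NSCache<NSURL, CGImage>()

    init(countLimit: Int = 200) {
        cache.countLimit = countLimit
    }

    /// Returns a cached thumbnail, or decodes a downsampled one from disk.
    func thumbnail(for url: URL, maxPixelSize: Int = 256) -> CGImage? {
        if let cached = cache.object(forKey: url as NSURL) {
            return cached
        }
        let options: [CFString: Any] = [
            // Always build from the full image rather than relying on an embedded thumbnail.
            kCGImageSourceCreateThumbnailFromImageAlways: true,
            // Respect the orientation recorded in the file.
            kCGImageSourceCreateThumbnailWithTransform: true,
            kCGImageSourceThumbnailMaxPixelSize: maxPixelSize
        ]
        guard let source = CGImageSourceCreateWithURL(url as CFURL, nil),
              let image = CGImageSourceCreateThumbnailAtIndex(source, 0, options as CFDictionary)
        else { return nil }
        cache.setObject(image, forKey: url as NSURL)
        return image
    }
}
```

Whether this keeps scrolling smooth on a large roll is untested here; decoding would still need to happen off the main thread.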

The exporting process consolidates metadata for the whole document and for each individual image into a single massive CFDictionary, which is then assigned as properties to a CGImageDestination for each exported image.
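A minimal sketch of that flow, assuming roll-level and frame-level metadata are each held as ImageIO-style nested dictionaries and that frame values win on conflict. The function names and the merge rule are illustrative, not Unspool's actual code.

```swift
import Foundation
import ImageIO

/// Merges roll-level and frame-level metadata, group by group (Exif, TIFF, GPS...).
/// Where both define the same key, the frame's value replaces the roll's.
func consolidatedProperties(
    roll: [CFString: [CFString: Any]],
    frame: [CFString: [CFString: Any]]
) -> [CFString: Any] {
    var merged = roll
    for (group, values) in frame {
        merged[group, default: [:]].merge(values) { _, frameValue in frameValue }
    }
    return merged
}

/// Copies the image at `sourceURL` to `destinationURL`, attaching `properties`.
/// Returns false if the source cannot be read or the destination cannot be written.
func exportImage(from sourceURL: URL, to destinationURL: URL, properties: [CFString: Any]) -> Bool {
    guard let source = CGImageSourceCreateWithURL(sourceURL as CFURL, nil),
          let type = CGImageSourceGetType(source),
          let destination = CGImageDestinationCreateWithURL(destinationURL as CFURL, type, 1, nil)
    else { return false }
    // The properties passed here are applied on top of those read from the source image.
    CGImageDestinationAddImageFromSource(destination, source, 0, properties as CFDictionary)
    return CGImageDestinationFinalize(destination)
}
```

Keeping the merge separate from the ImageIO calls means the consolidation logic can be unit-tested without touching the file system.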

How I build it

Since I am the only developer working on Unspool (and likely to remain the only developer ever to work on it) I am developing principally on the main branch, only creating feature branches when I have a refactor or a feature that’s large enough that I might not want to do it all at once.

It builds on every push to main using Xcode Cloud (while it’s free, at least) and deploys automatically to a TestFlight internal testing group with each build. I will come up with some kind of release tagging scheme when it’s time to do a public beta.

There is a small suite of unit tests, targeted around the encoding/decoding of the index file, and also around assembling the dictionary of metadata properties.

Current status, March 2023

I use Unspool on my computer every time I get a bunch of scans back from the lab. It’s quite a bit faster than importing into the Mac Photos app, having to re-order the photos (complicated by the scans sometimes being numbered in reverse chronological order, and Photos’s annoying habit of importing in a random order) and then opening the Adjust Date and Time and Inspector windows for each image to set its location. I haven’t measured how much faster because I couldn’t really do a fair test—but it makes me less frustrated.

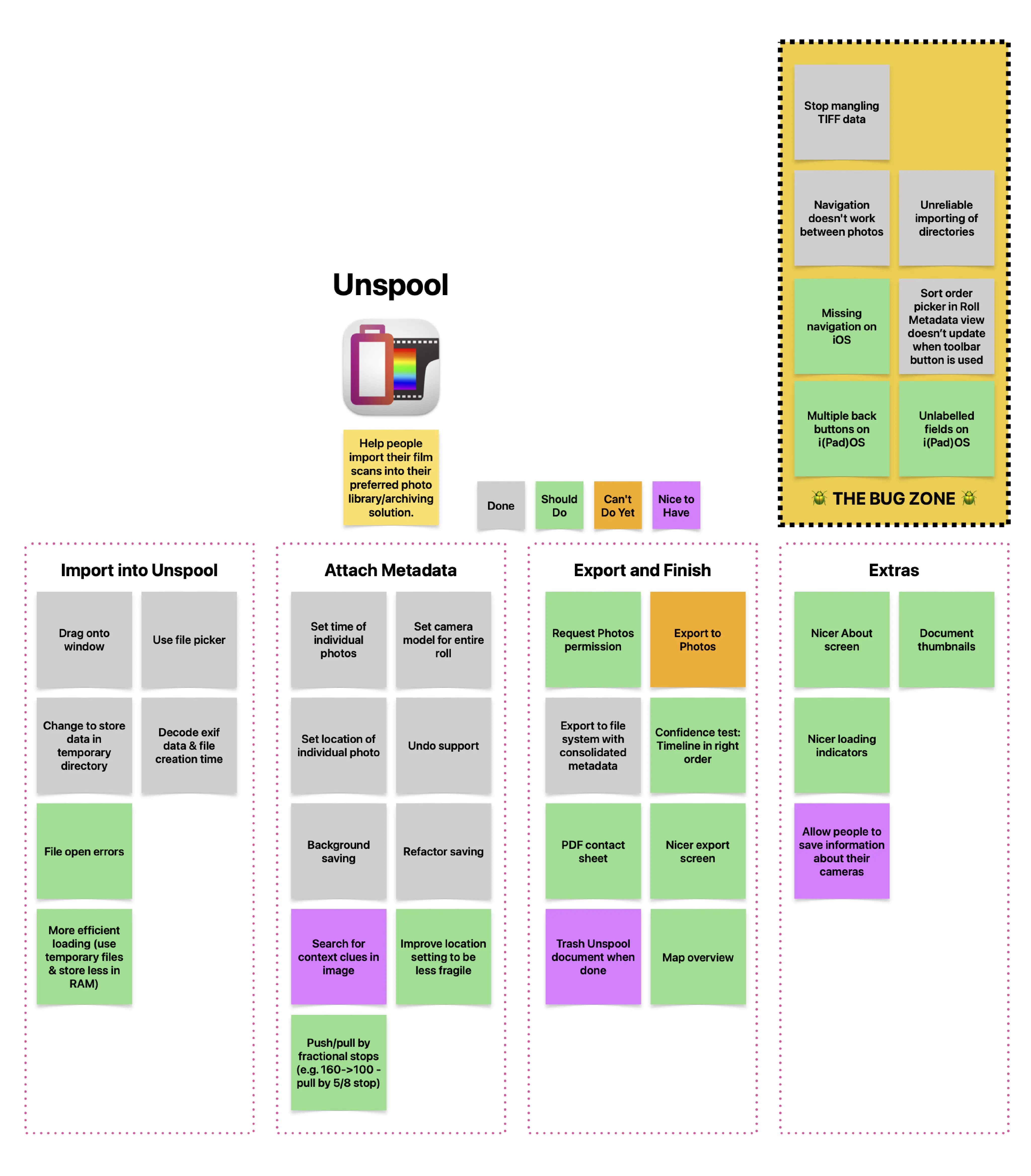

When I started this escapade just before Christmas, I made myself a story map of sorts in the Freeform app (Apple’s answer to Miro) which I have tried to keep mostly up-to-date:

I have started the process of trying to use GitHub Projects to manage the stuff I need to/want to do with Unspool, but this seems like overkill at the moment, given that I am simultaneously the sole developer, sole product/business owner, and also manage the quality of the project.

Talking of quality, I mentioned there was a small test suite. It’s much smaller than I’d like. Coverage currently sits at around 10%. The good news is that the most critical logic, around consolidating metadata, has about 95% coverage; much of the rest of the code is connective tissue. Nonetheless, I plan on holding off on any massive new features for the moment, instead focusing on stability, refactoring, and drastically improving test coverage—principally for unit tests, but also thinking about some integration tests that can act as a confidence test for a normal workflow.

There are also architectural changes in the pipeline. As I mentioned earlier, I haven’t found a reliable way to retain thumbnails in memory so that they display instantly when scrolling—that’s something I’m continuing to investigate. I also need to find a way to feed the original metadata from the file through the export process and populate the index on an import—particularly for the use case where the lab has rotated an image taken in portrait, for instance.

State of the platform

This has been the most substantial MacOS app, and certainly the most “traditional”, that I’ve ever built in anger. To Apple’s credit, the Swift language, and SwiftUI, and the vastly improved documentation, have made this a lot easier than it used to be when I first tried over a decade ago and ran up against Objective-C.

That said, of course there were issues—what software project is without them?—so I thought I’d take the time to list some of them here.

Gaps in SwiftUI

As of the end of March 2023, SwiftUI, while now substantial and a viable option for lots of things, still feels incomplete. This is to be expected—however, I was surprised by the extent to which there were gaps, particularly for things that feel idiomatic on the Mac but not on an iPhone.

For instance, a text box that offers a menu of autocomplete suggestions à la Music or Maps, or even Spotlight when accessed from the Finder? Apple’s most recent code sample of an autocomplete menu on MacOS was last updated in 2012 and was built for OS X Mountain Lion.

I could, of course, try implementing this myself. Some answers online seem to port this code sample to Swift. Some use a popover window. Each of these has its own advantages and pitfalls, particularly when it comes to support for assistive technologies. But I do wonder what the point of me doing this would be if a text box selection menu became part of the standard SwiftUI library in macOS 14.

For now, my temporary solution is to use a TextField with a Menu underneath, which is far from ideal, but at least usable with the keyboard:
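A minimal sketch of what such an arrangement could look like. The view name, the prefix-matching rule, and the “Suggestions” label are all hypothetical rather than taken from Unspool:

```swift
import SwiftUI

/// Case-insensitive prefix match; an empty query offers no suggestions.
func matchingSuggestions(for query: String, in candidates: [String]) -> [String] {
    guard !query.isEmpty else { return [] }
    let loweredQuery = query.lowercased()
    return candidates.filter { $0.lowercased().hasPrefix(loweredQuery) }
}

/// A text field with a menu of matching suggestions directly beneath it.
struct SuggestingTextField: View {
    let title: String
    let candidates: [String]
    @Binding var text: String

    private var matches: [String] {
        matchingSuggestions(for: text, in: candidates)
    }

    var body: some View {
        VStack(alignment: .leading) {
            TextField(title, text: $text)
            Menu("Suggestions") {
                ForEach(matches, id: \.self) { suggestion in
                    // Choosing a suggestion replaces the typed text.
                    Button(suggestion) { text = suggestion }
                }
            }
            .disabled(matches.isEmpty)
        }
    }
}
```

Because `Menu` is a standard control, it is reachable from the keyboard without extra work, which is the main appeal over a hand-rolled popover.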

The current implementation of Map also leaves much to be desired: it’s not really usable other than for displaying a pre-existing location. You can’t have people drop a pin wherever they like on the map. State management is awkward. Most sources online suggest wrapping MKMapView, which I am loath to do but which may be inevitable in the long run.

Tables are now supported, but editable tables (by ‘editable’ I mean ‘the contents of each cell can be clicked and edited’) take a bit more work, so I’ve put this off for now as well. DatePicker only allows the setting of a date or time to the nearest minute, with no option to specify seconds, which seems like an odd omission.

The biggest (and most annoying) problem I’ve had building a Mac app in SwiftUI has been with toolbars. Toolbar placements appear to be unreliable, with additions to the status placement seeming to move items from the primary position to the centre as well. Documentation seems to be largely focused on toolbars for iPhone.

It’s worth noting that much of SwiftUI simply provides an abstraction over existing AppKit/UIKit controls. This explains why I was getting crashes mentioning NSToolbar when I tried adding a toolbar() modifier to an individual detail view—I had assumed this would mean the item/group would disappear when that view disappeared, but it seems this is not the preferred way of implementing a toolbar that changes depending on the state of the main view.

The state of Xcode Cloud

Xcode Cloud is fast, and generally pretty good when used in the browser. At the low low price of free (until December) it’s hard to argue with, particularly compared to similar services from GitHub, Bitrise, and others. I do like the sensible defaults, particularly for iOS UI tests to be run on a suite of devices rather than a single device, which can help catch layout defects.

However, integration with Xcode itself—something Apple should be very good at—is worryingly flaky. And sure, it’s only a moderate inconvenience to have to sign back into Xcode or trash your DerivedData directory to get builds to show up again. What’s more worrying is the number of crashes that seem to be caused by decoding errors when fetching build reports from the backend.

On a related note, tests are turned off in my Xcode Cloud configuration. This is due to a bug in Xcode Cloud that means any MacOS test target for an app with the hardened runtime results in… this beauty (it goes on the longer you look at it):

Unspool (3301) encountered an error (Failed to load the test bundle. If you believe this error represents a bug, please attach the result bundle at /Volumes/workspace/resultbundle.xcresult. (Underlying Error: The bundle “UnspoolTests” couldn’t be loaded. The bundle couldn’t be loaded. Try reinstalling the bundle. dlopen(/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests, 0x0109): tried: '/Volumes/workspace/TestProducts/Debug/UnspoolTests' (no such file), '/Applications/Xcode.app/Contents/Developer/Platforms/MacOSX.platform/Developer/usr/lib/UnspoolTests' (no such file), '/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests' (code signature in <A33477CA-C823-3BEA-8E03-C0F0E2C5A21E> '/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests' not valid for use in process: mapped file has no Team ID and is not a platform binary (signed with custom identity or adhoc?)), '/System/Volumes/Preboot/Cryptexes/OS/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests' (no such file), '/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests' (code signature in <A33477CA-C823-3BEA-8E03-C0F0E2C5A21E> '/Volumes/workspace/TestProducts/Debug/Unspool.app/Contents/PlugIns/UnspoolTests.xctest/Contents/MacOS/UnspoolTests' not valid for use in process: mapped file has no Team ID and is not a platform binary (signed with custom identity or adhoc?))))

Apple is aware, but the defect remains unfixed—hopefully this does not get forgotten or deprioritised for too long. For those who need to build a Mac app, this could be a dealbreaker.

What’s next

As I mentioned above, right now I intend to focus on quality-of-life improvements and considerably increasing test coverage—particularly around the exporting logic, which (aside from the part that consolidates metadata) is not as well-tested as I would like it to be. This will probably involve creating some abstractions around the exporting process itself so that it doesn’t have as much of a dependency on the file system—also bound to make things easier when it comes time to build a direct export to Photos.

I also want to add a confidence check before exporting that the metadata has been filled out correctly. For instance, it makes sense that the dates in the roll should be sequential. If frame 5 was taken on Wednesday, frame 6 on Tuesday, and frame 7 on Thursday, that probably means we have a problem—and we should at the very least display a warning.
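The date check could start out as something very simple. A minimal sketch, assuming a hypothetical `outOfOrderFrames` helper that compares each dated frame with the nearest dated frame before it:

```swift
import Foundation

/// Returns the zero-based positions of frames dated earlier than the
/// nearest preceding dated frame. Frames without a date are skipped, not flagged.
func outOfOrderFrames(_ captureDates: [Date?]) -> [Int] {
    var flagged: [Int] = []
    var lastSeen: Date?
    for (index, candidate) in captureDates.enumerated() {
        guard let date = candidate else { continue }
        if let last = lastSeen, date < last {
            flagged.append(index)
        }
        lastSeen = date
    }
    return flagged
}
```

With the Wednesday, Tuesday, Thursday example, only the Tuesday frame is flagged. A single wildly wrong date would also flag the frame after it, so this is a prompt for a warning rather than proof of an error.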

Finally, exports do not report their progress to the UI. Instead, they execute on the main thread, causing the app to hang, which is especially annoying on slow network drives. I need to work out a meaningful way to pass progress up to the UI while the export happens in the background.
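One possible shape for this, sketched with Swift concurrency: run the export in a detached task and publish progress through an `AsyncThrowingStream`. The `exportProgress` function and its `exportOne` closure are hypothetical stand-ins for whatever actually writes each file.

```swift
import Foundation

/// Exports each frame off the calling thread, yielding the completed fraction (0...1)
/// after every frame. The stream finishes when all frames are done, or throws on failure.
func exportProgress(
    for frames: [URL],
    exportOne: @escaping @Sendable (URL) throws -> Void
) -> AsyncThrowingStream<Double, Error> {
    AsyncThrowingStream { continuation in
        let task = Task.detached(priority: .userInitiated) {
            do {
                for (index, frame) in frames.enumerated() {
                    try Task.checkCancellation()
                    try exportOne(frame)
                    continuation.yield(Double(index + 1) / Double(frames.count))
                }
                continuation.finish()
            } catch {
                continuation.finish(throwing: error)
            }
        }
        // If the consumer stops listening, stop exporting too.
        continuation.onTermination = { _ in task.cancel() }
    }
}
```

A view model on the main actor could then loop with `for try await fraction in exportProgress(for:exportOne:)` and assign each value to a published property that drives a progress bar, leaving the main thread free while the files are written.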

Anyway. That is Unspool, as of March 2023. I hope you’ve enjoyed this little peek into a hobby that’s been consuming a lot of my headspace over the last few months. I intend to write more of these, about once a month—and generally I shoot anywhere between two and six rolls of film per month (depending on what the light’s like), so I’ll have plenty of opportunity to eat my own dog food!

(P.S. It is still a long way off, but if you shoot film, have a Macintosh computer, know how to write bug reports, and don’t mind a beta version occasionally mangling your metadata—you can shoot me an email and I can register your interest.)